Organisation

| Term | SS only |
|---|---|
| Time | Thursday, 08:15-11:30 |
| Room | 220 |
| Credits | 2 SWS / 5 ECTS |
| Exam | Lab Work |

Announcements

- First lesson in term SS 24: 21.03.24

Prerequisites for participation

- Successful completion of the CSM Machine Learning Lecture.

Structure, Contents, Documents

In this lab, student groups implement selected applications of Machine Learning, in particular Deep Learning. All applications must be implemented in Python Jupyter notebooks. The Python machine learning libraries scikit-learn, TensorFlow, and Keras are used.

I recommend downloading Anaconda for Python 3.8 or newer. Then create a new virtual environment with

conda create -n pia python=3.8 anaconda

In this virtual environment, use pip install to install tensorflow, keras, gensim, and any other required modules.
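Once the environment is set up, a quick check can confirm that the packages named above are available. The following is only a minimal sketch: the function name `missing_modules` and the list `REQUIRED_MODULES` are illustrative assumptions, not part of the course material. It relies on the standard-library function `importlib.util.find_spec` and should be run inside the activated environment.

```python
from importlib.util import find_spec

# Import names of the packages mentioned above (assumed list for illustration).
# Note that scikit-learn is imported under the name "sklearn".
REQUIRED_MODULES = ["sklearn", "tensorflow", "keras", "gensim"]


def missing_modules(names):
    """Return the names from `names` that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Any module reported as missing can then be installed with pip install in the same environment.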

Each of the lab exercises (applications) will be graded. The final grade of the course is the mean of the exercise grades.
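As a small illustration of the grading rule, the sketch below computes the final grade as the unweighted arithmetic mean of the exercise grades. The grade values are made up, and the assumptions of equal weighting and no rounding are mine; the function name `final_grade` is hypothetical.

```python
from statistics import mean


def final_grade(exercise_grades):
    """Return the unweighted arithmetic mean of the exercise grades."""
    if not exercise_grades:
        raise ValueError("at least one exercise grade is required")
    return mean(exercise_grades)


# Hypothetical grades for four exercises (made-up values for illustration only).
example_grades = [1.0, 2.0, 1.0, 2.0]
print(final_grade(example_grades))  # prints 1.5
```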

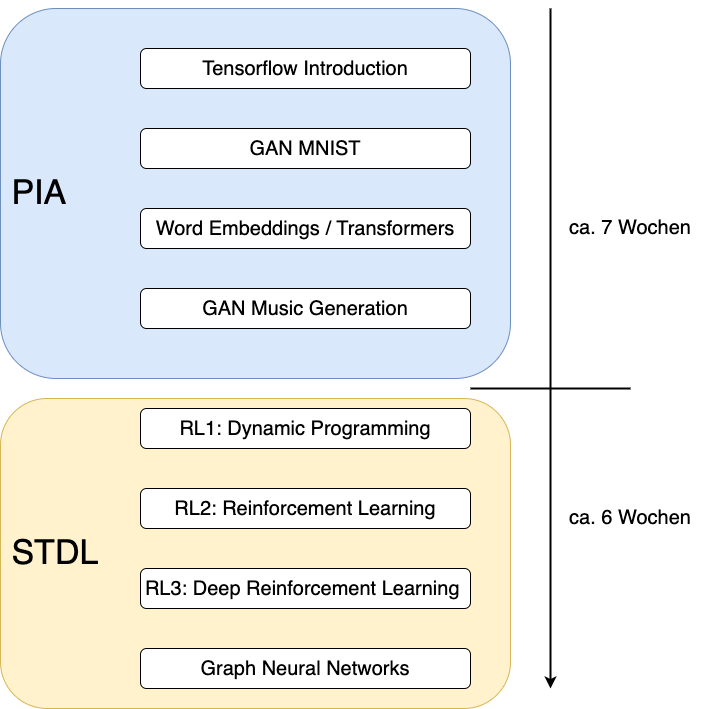

Since term SS21, the lecture PIA has been combined with the lecture Selected Topics of Deep Learning (STDL). The lab exercises of PIA have been extended and split across these two lectures. As depicted below, the first 4 exercises are assigned to PIA and the remaining 4 exercises are assigned to STDL. The 2 modules therefore run consecutively. Students who want to complete all exercises must register for both PIA and STDL. It is also possible to register only for PIA. It is not possible to register only for STDL, because it makes no sense to skip the introduction in the first part and start with the 5th exercise.

Preliminary schedule and links to resources for both parts: PIA and STDL

| Date | Contents |
|---|---|
| 21.03.2024 | Short Introduction and Registration |
| 28.03.2024 | Introduction to TensorFlow |
| 04.04.2024 | Implementation of GAN for MNIST |
| 11.04.2024 | Implementation of GAN for MNIST |
| 18.04.2024 | Word Embeddings Transformers |
| 25.04.2024 | Word Embeddings Transformers |
| 02.05.2024 | RNN Music Generation |
| 09.05.2024 | Ascension Day (Christi Himmelfahrt) |
| 16.05.2024 | RNN Music Generation |
| ---- | ---- |
| 23.05.2024 | Graph Neural Networks |
| 30.05.2024 | Corpus Christi (Fronleichnam) |
| 06.06.2024 | RL 1: Dynamic Programming |
| 13.06.2024 | RL 2: Reinforcement Learning |
| 20.06.2024 | RL 3: Deep Reinforcement Learning |
| 27.06.2024 | RL 3: Deep Reinforcement Learning |